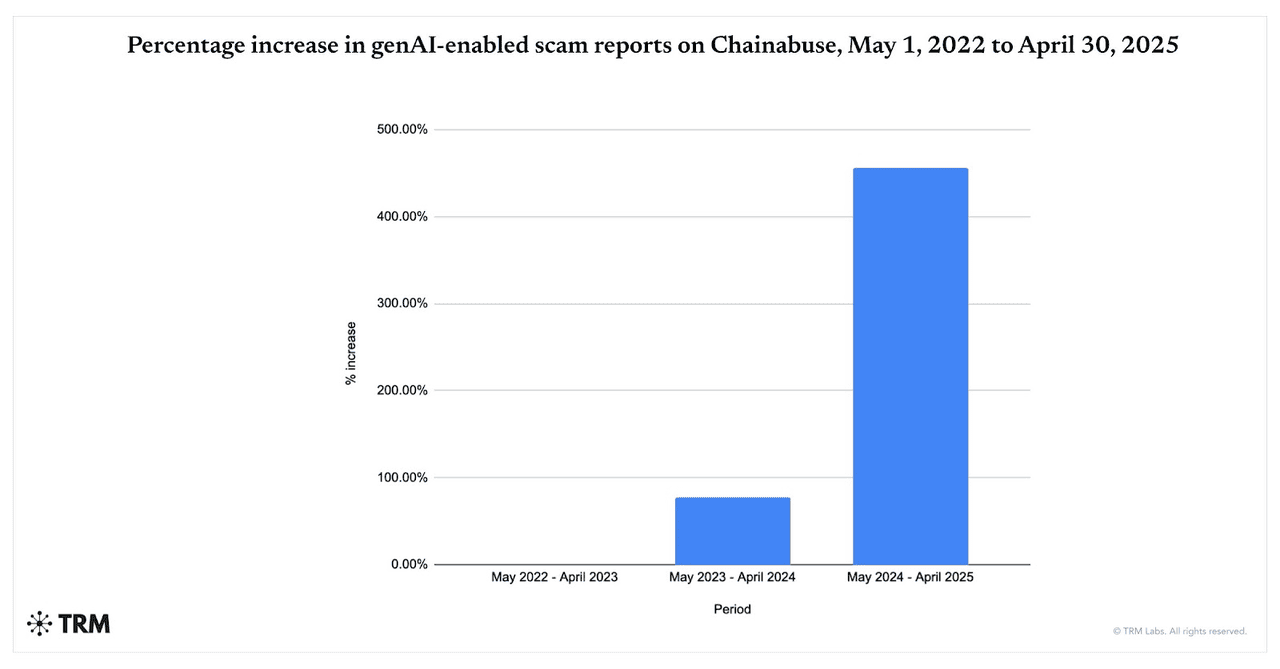

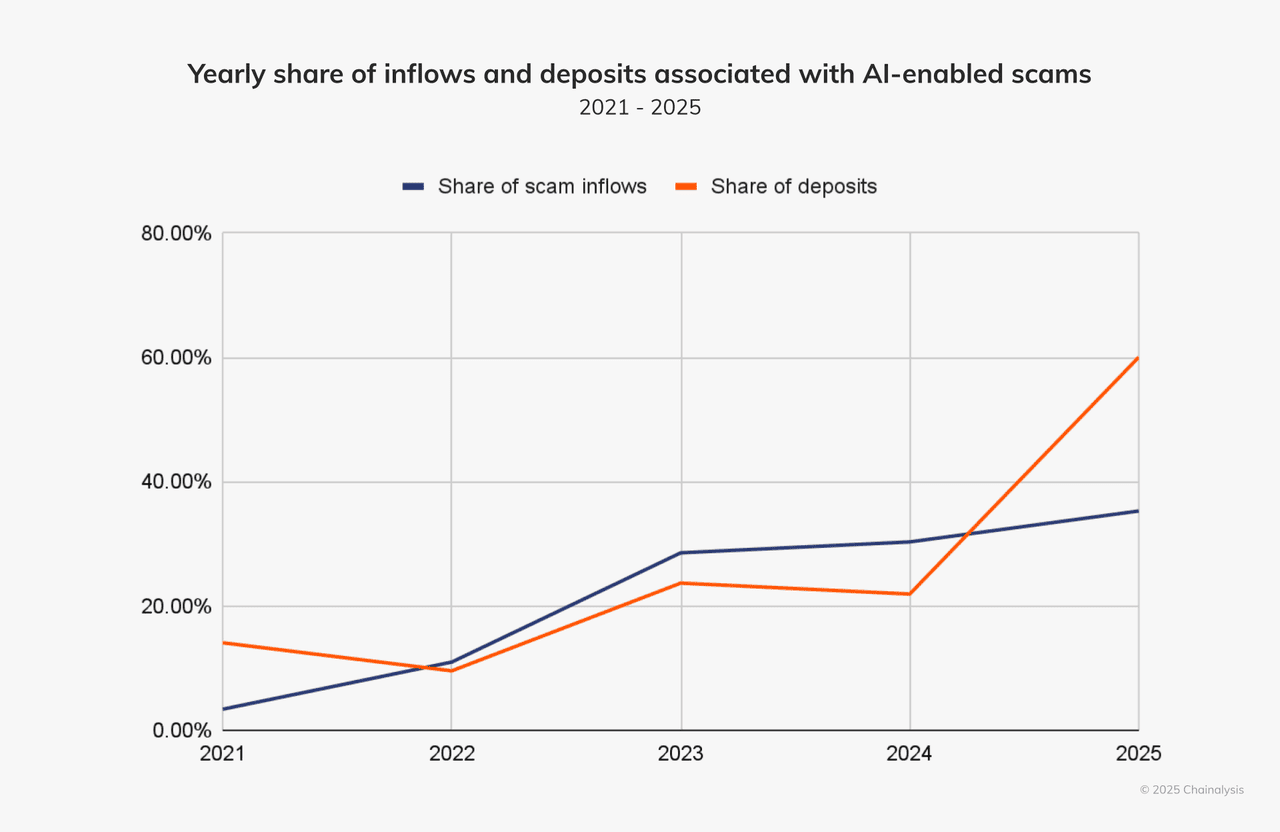

AI-powered scams are accelerating. Between May 2024 and April 2025, reports of gen-AI–enabled scams jumped 456%, per TRM Labs’ Chainabuse data. Chainalysis also finds that 60% of deposits into scam wallets now flow to scams that leverage AI tools, up sharply from 2024, underscoring how widely fraudsters are adopting LLMs, deepfakes, and automation.

Generative AI-enabled scams surge from 2022 to 2025 | Source: TRM Labs

What’s driving the surge in AI-powered crypto scams in 2025? AI delivers speed, scale, and realism: one operator can spin up thousands of tailored phishing lures, deepfake videos/voices, and brand impersonations in minutes, content that evades legacy filters and convinces victims. As of November 2025, new attack surfaces like prompt-injection against agentic browsers and AI copilots have raised the risk that malicious webpages or screenshots can hijack assistants connected to wallets or accounts.

And crypto remains a prime target: fast-moving markets, irreversible transactions, and 24/7 on-chain settlement make recovery hard, while broader 2025 crime trends from hacks to pig-butchering show the crypto ecosystem’s overall risk rising.

In this guide, you’ll learn what AI crypto scams are, how they work, and how to stay safe when using trading platforms, including BingX.

What Are AI‑Powered Crypto Scams and How Do They Work?

AI-powered crypto scams use advanced artificial intelligence techniques to deceive you, stealing your money, private keys, or login credentials. These scams go far beyond the old-school phishing schemes. They’re smarter, faster, and far more believable than ever before.

Traditional crypto fraud typically involved manual tactics: poorly written emails, generic social-media giveaways, or obvious impersonation. Those were easier to spot if you knew what you were looking for.

AI changes the game, and the numbers show how fast. TRM Labs reports a 456% surge in generative-AI scam activity between May 2024 and April 2025, while Chainalysis finds that about 60% of all deposits into scam wallets now go to operations using AI tools. The Defiant likewise notes that AI-driven crypto scams grew nearly 200% year-over-year as of May 2025.

Fraudsters are leveraging generative AI, machine-learning bots, voice cloning, and deepfake video to:

1. Create Realistic and Personalized Content That Feels Human

AI tools can generate phishing emails and fake messages that sound and read like they came from a trusted friend, influencer, or platform. They use flawless grammar, mimic speech patterns, and even insert personal touches based on your online behaviour. Deepfake videos and voice clones push this further: you might genuinely believe a CEO, celebrity or acquaintance is speaking to you.

2. Launch Massive Attacks at Lightning Speed

With generative AI and large language models (LLMs), scammers can produce thousands of phishing messages, fake websites, or impersonation bots in seconds. These messages can be localized, personalized, and distributed across email, Telegram, Discord, SMS, and social media. What once required dedicated teams can now be done by a single operator with the right tools.

3. Bypass Traditional Filters and Security Systems

Older fraud detection systems looked for spelling mistakes, obvious social-engineering cues, and reused domains. AI-powered scams avoid these traps. They generate clean copy, rotate domains, use invisible/zero-width characters, mimic human behaviour, and combine channels such as voice, video, and chat. According to analytics firm Chainalysis, about 60% of all deposits into scam wallets now flow to scams that leverage AI tools.

Inflows and deposits from AI scams are on the rise | Source: Chainalysis

These attacks are more convincing precisely because they mimic how real people behave, speak, and write. They’re easier to scale and harder to detect. For example: using a tool like WormGPT or FraudGPT, one attacker can launch thousands of very credible scams in minutes.
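To make the invisible-character trick from point 3 concrete, here is a minimal Python sketch of how a filter might spot and strip zero-width characters before scanning a message for scam keywords. The function names and the list of code points are illustrative assumptions, not any vendor’s actual detection logic.

```python
import unicodedata

# Zero-width and invisible code points that can be used to split up keywords.
# This list is an illustrative assumption, not an exhaustive catalogue.
INVISIBLE_CODE_POINTS = {
    "\u200b",  # zero width space
    "\u200c",  # zero width non-joiner
    "\u200d",  # zero width joiner
    "\u2060",  # word joiner
    "\ufeff",  # zero width no-break space
}


def _is_invisible(char: str) -> bool:
    # "Cf" is the Unicode general category for format characters,
    # which render with no visible glyph.
    return char in INVISIBLE_CODE_POINTS or unicodedata.category(char) == "Cf"


def find_invisible_characters(text: str) -> list[tuple[int, str]]:
    """Return (index, Unicode name) for each invisible character in text."""
    return [
        (index, unicodedata.name(char, "UNKNOWN"))
        for index, char in enumerate(text)
        if _is_invisible(char)
    ]


def strip_invisible_characters(text: str) -> str:
    """Remove invisible characters so keyword filters see the plain text."""
    return "".join(char for char in text if not _is_invisible(char))


if __name__ == "__main__":
    message = "ver\u200bify your wal\u200dlet"
    print(find_invisible_characters(message))
    print(strip_invisible_characters(message))
```

Format characters also appear in legitimate text, such as some emoji sequences and certain scripts, so a hit is best treated as one signal among several rather than proof of a scam.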

Why Is Crypto an Ideal Target for AI Scams?

The crypto market is especially vulnerable to this new generation of scams: transactions are fast, often irreversible, and users are frequently outside traditional regulatory or consumer-protection frameworks. Add in a global audience, multiple channels such as social media, chat, and forums, and triggers that play on emotion and greed, e.g., “double your crypto”, “exclusive airdrop”, “CEO endorsement”, and you have an environment where AI-powered scammers thrive.

What Are the Common Types of AI-Driven Crypto Scams?

AI-powered crypto scams now mix deepfakes, large language models (LLMs), and automation to impersonate people, mass-produce phishing, and bypass legacy filters. Let’s explore the most common types and real-world cases that show how dangerous they’ve become.

1. Deepfake Scams: Audio and Video Impersonation

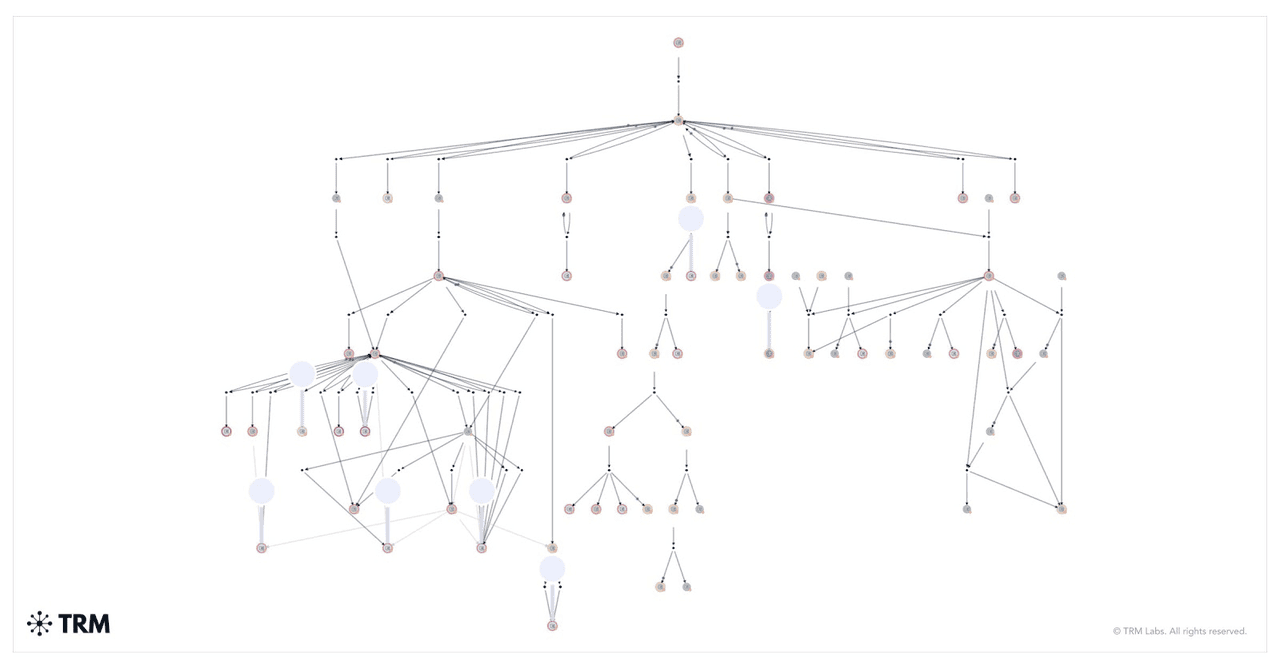

How the funds from a deepfake giveaway scam were moved | Source: TRM Labs

Deepfake scams use AI-generated videos or audio clips to impersonate public figures, influencers, or even executives from your own company. Scammers manipulate facial expressions and voice patterns to make the content seem real. These fake videos often promote fraudulent crypto giveaways or instruct you to send funds to specific wallet addresses.

One of the most alarming cases happened in early 2024. A finance employee at a multinational company in Hong Kong joined a video call with what appeared to be the company’s CFO and senior executives. They instructed him to transfer $25 million. It was a trap. The call was a deepfake, and every face and voice was generated by AI. The employee didn’t know until it was too late.

This same tactic is being used to impersonate tech leaders like Elon Musk. In one scam, deepfake videos of Musk promoted a Bitcoin giveaway. Viewers were told to send BTC to a wallet and get double the amount back. Chainalysis tracked a single wallet that collected millions of dollars during a fake livestream on YouTube.

2. AI-Generated Phishing

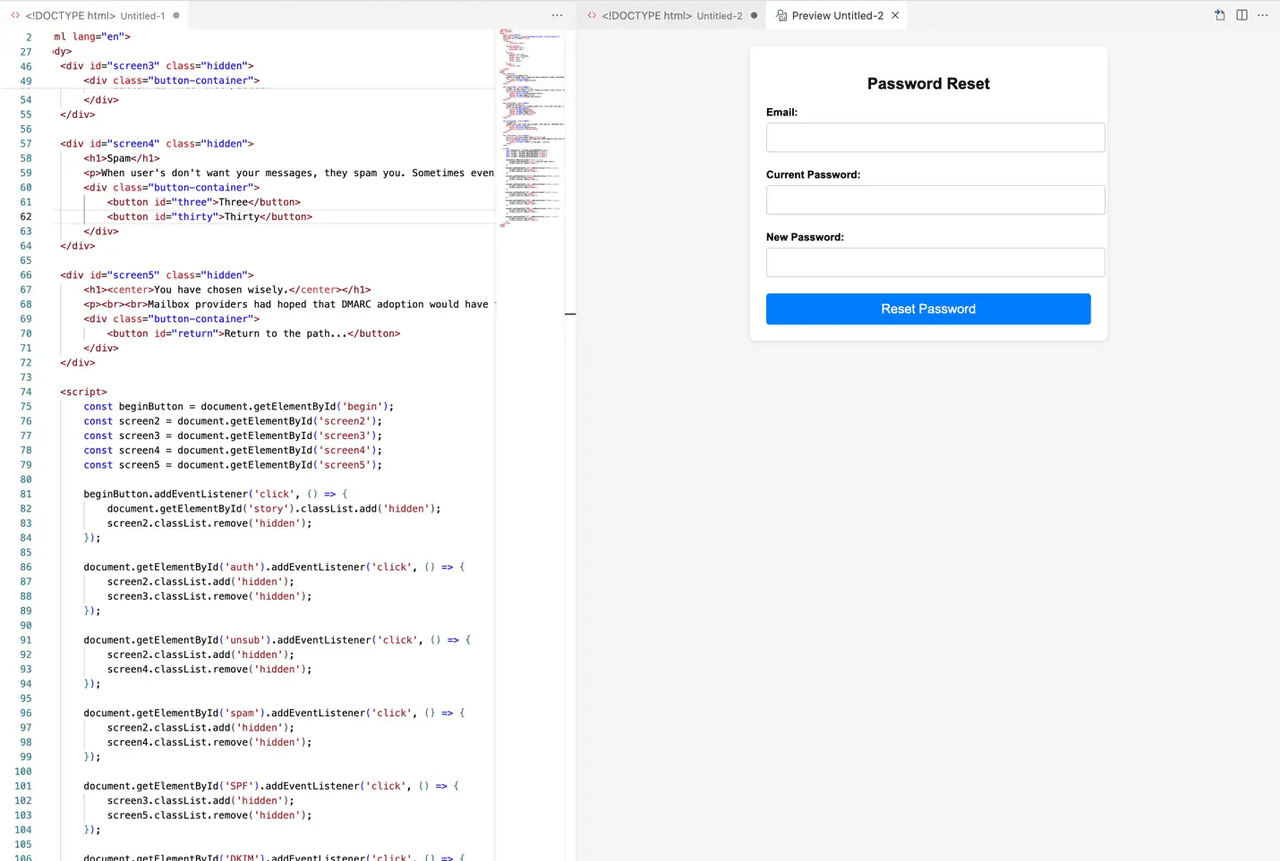

Example of an AI-generated phishing website | Source: MailGun

Phishing has evolved with AI. Instead of sloppy grammar and suspicious links, these messages look real, and they feel personal. Scammers use AI to gather public data about you, then craft emails, DMs, or even full websites that match your interests and behavior.

The scam might come through Telegram, Discord, email, or even LinkedIn. You could receive a message that mimics BingX support, urging you to “verify your account” or “claim a reward.” The link leads to a fake page that looks nearly identical to the real thing. Enter your info, and it’s game over.

TRM Labs reported a 456% increase in reports of generative-AI-enabled scams in just one year. Phishing attacks now use large language models (LLMs) to mimic human tone and adapt to different languages. Some scammers even use AI to bypass KYC checks, generate fake credentials, or simulate live chats with “support agents.”

3. Fake AI Trading Platforms & Bots

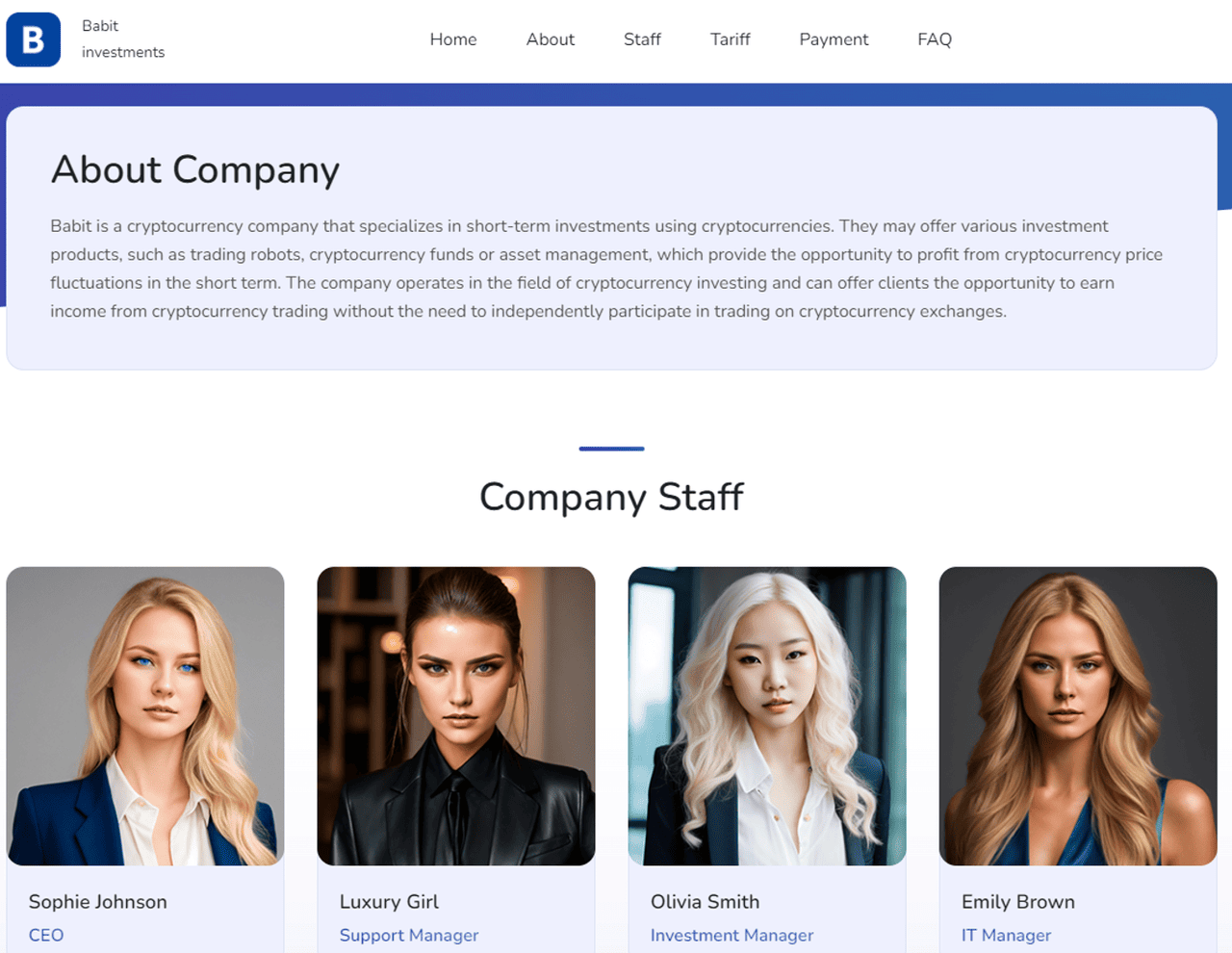

How MetaMax used AI to create a fake company with fake employees | Source: TRM Labs

Scammers also build entire trading platforms that claim to use AI for automatic profits. These fake tools promise guaranteed returns, “smart” trade execution, or unbeatable success rates. But once you deposit your crypto, it vanishes.

These scams often look legitimate. They feature sleek dashboards, live charts, and testimonials, all powered by AI-generated images and code. Some even offer demo trades to fake performance. In 2024, sites like MetaMax used AI avatars of fake CEOs to gain trust and draw in unsuspecting users.

In reality, there’s no AI-powered strategy behind these platforms, just a well-designed trap. Once funds enter, you’ll find you can’t withdraw anything. Some users report their wallets getting drained after connecting them to these sites. AI bots also send “signals” on Telegram or Twitter to push you toward risky or nonexistent trades.

4. Voice Cloning and Real-Time Calls

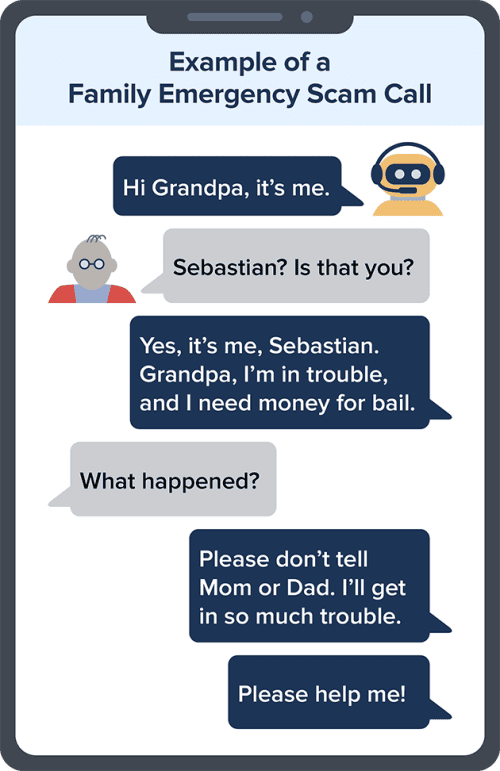

Example of an AI voice cloning scam | Source: FTC

AI voice cloning makes it possible for scammers to sound exactly like someone you know. They can recreate a CEO’s voice, your manager’s, or even a family member’s, then call you with urgent instructions to send crypto or approve a transaction.

This technique was used in the $25 million Hong Kong heist mentioned earlier. The employee wasn’t just tricked by deepfake video; the attackers also cloned voices in real time to seal the deception. Just a few seconds of audio is enough for scammers to recreate someone’s voice with shocking accuracy.

These calls often come during off hours or emergencies. You might hear something like: “Hey, it’s me. Our account is frozen. I need you to send USDT now.” If the voice sounds familiar and the request is urgent, you might not question it, especially if the number appears legit.

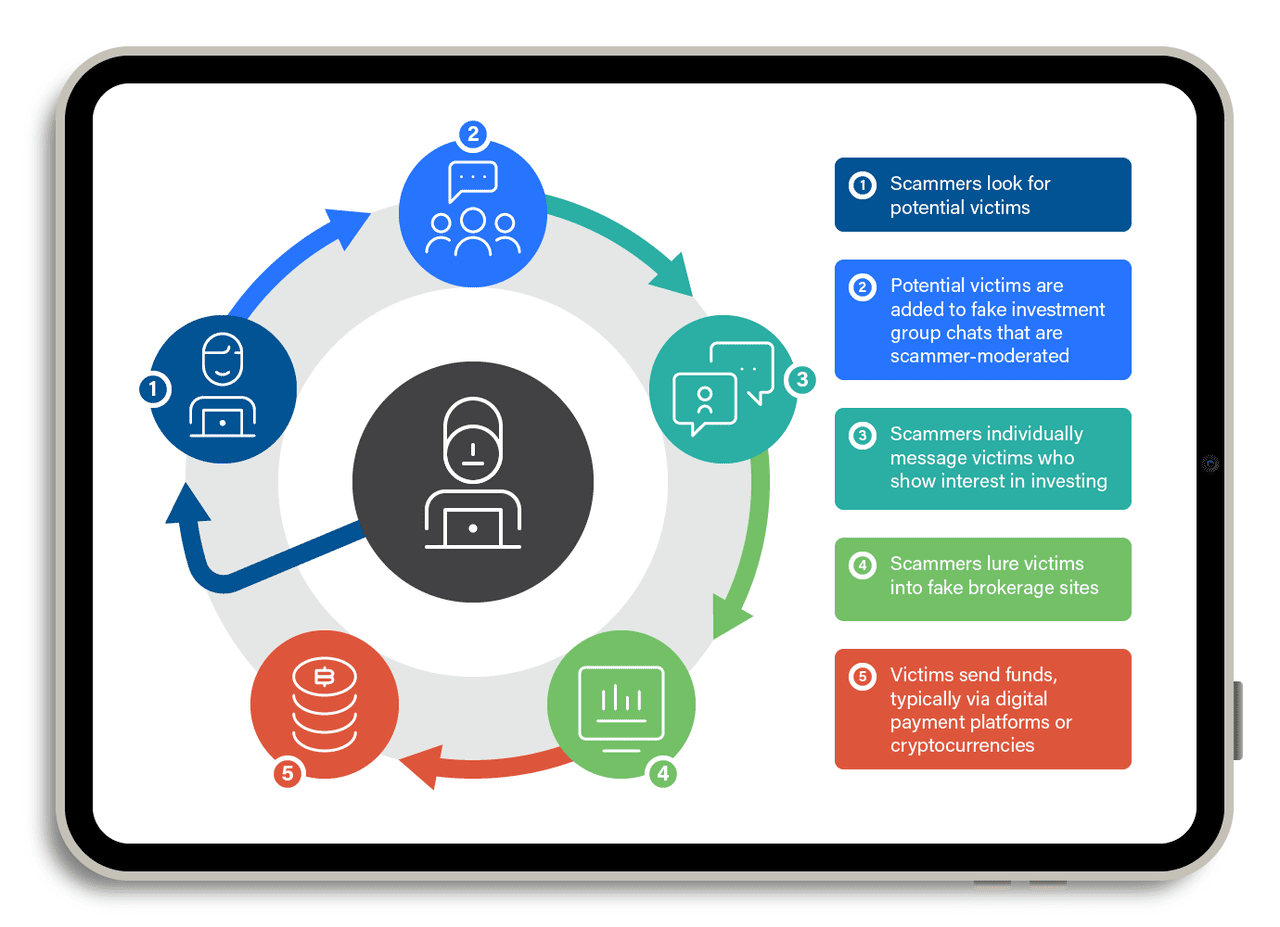

5. Pig-Butchering with AI

How a pig-butchering scam works | Source: TrendMicro

“Pig butchering” scams are long cons. They involve building trust over time, maybe weeks or even months. Scammers pretend to be a romantic interest or business partner, often using dating apps, Telegram, or WeChat. Once they gain your confidence, they convince you to invest in a fake crypto platform.

Now, they’re using AI chatbots to scale this strategy. These bots hold natural, flowing conversations. They follow up regularly, answer your questions, and even offer life advice. It’s all scripted, but it feels real.

Chainalysis estimated that crypto scams as a whole, with pig-butchering among the biggest categories, took in at least $9.9 billion on-chain in 2024. Some scammers even use deepfakes for video calls, showing a friendly face that seems human. Victims deposit small amounts, see fake gains, and then invest more, until the site disappears or withdrawals are blocked.

These scams all rely on one thing: your trust. By mimicking real people, platforms, and support teams, AI tools make it harder to tell what’s real and what’s fake. But once you know how these scams work, you’re much better prepared to stop them. Stay alert, and don’t let AI take your crypto.

6. Prompt-Injection Against Agentic Browsers and Wallet-Connected AIs

A new threat in 2025 involves prompt-injection, where a malicious website, image, or text “hijacks” an AI agent connected to a browser, email, or even a crypto wallet. Because some AI browsers and wallet copilots can read data, summarize pages, or take actions on a user’s behalf, a hidden instruction can force the agent to leak private information or initiate unsafe transactions.

Security researchers quoted by Elliptic and multiple industry blogs warn that this risk rises as more AIs gain permissions linked to funds. For example, a prompt can instruct the AI to “send assets only to <attacker wallet>,” meaning any future transaction routed through the agent could be diverted without the user noticing. Since users often trust AI to “automate” tasks, this attack surface is growing faster than traditional phishing and is harder to detect because nothing “looks” suspicious to the human victim.
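One way to limit the damage of a hijacked agent is to keep the rules about where money may go outside anything the AI can read or rewrite. The Python sketch below illustrates that idea under stated assumptions: `ProposedTransfer`, `TransferPolicy`, and `review_transfer` are hypothetical names invented for this example, not part of any real wallet or agent API.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class ProposedTransfer:
    """A transfer an AI agent wants to make (hypothetical structure)."""
    destination: str
    amount: Decimal
    asset: str


@dataclass(frozen=True)
class TransferPolicy:
    """User-defined limits stored outside the agent's prompt context."""
    approved_destinations: frozenset[str]
    max_amount_without_confirmation: Decimal


def review_transfer(transfer: ProposedTransfer, policy: TransferPolicy) -> str:
    """Return 'allow', 'confirm', or 'block' for an agent-proposed transfer."""
    # An injected prompt can change what the agent asks for, but it cannot
    # add an attacker's address to a list the agent has no write access to.
    if transfer.destination not in policy.approved_destinations:
        return "block"
    # Larger amounts always go back to the human for explicit approval.
    if transfer.amount > policy.max_amount_without_confirmation:
        return "confirm"
    return "allow"


if __name__ == "__main__":
    policy = TransferPolicy(
        approved_destinations=frozenset({"my-cold-wallet-address"}),
        max_amount_without_confirmation=Decimal("100"),
    )
    hijacked = ProposedTransfer("attacker-wallet-address", Decimal("25"), "USDT")
    print(review_transfer(hijacked, policy))
```

The design choice here is that the check is plain code running after the agent decides and before anything is signed, so hidden instructions on a webpage have nothing to manipulate.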

7. KYC Bypass and Fake IDs at Exchanges and VASPs

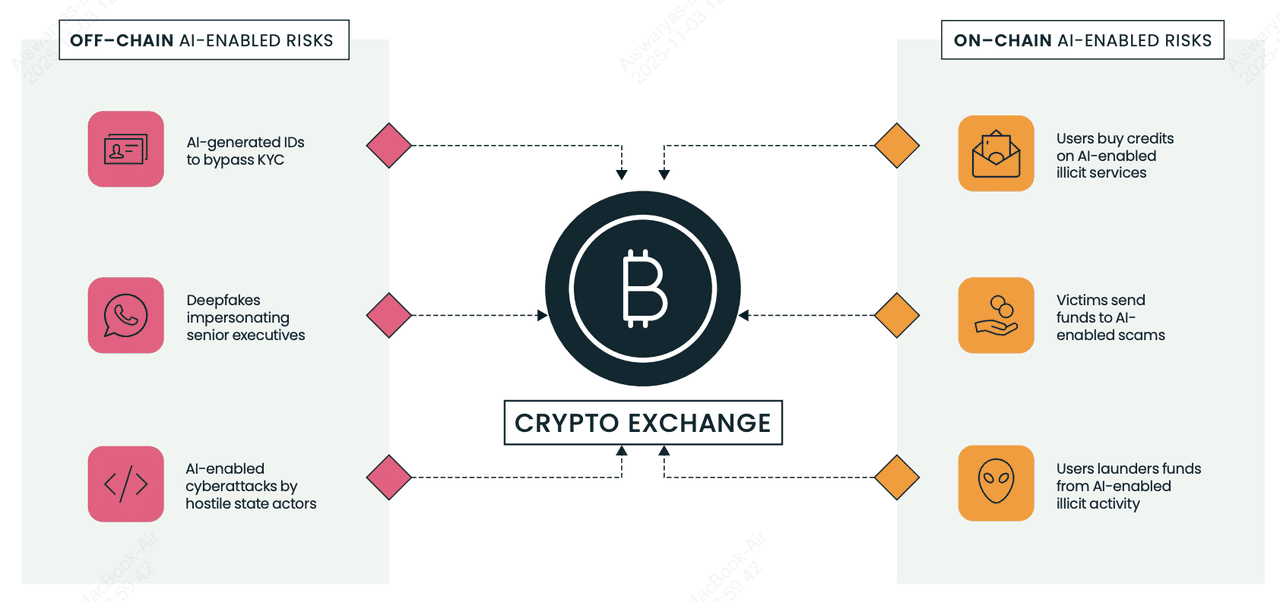

On-chain and off-chain AI-related risks for crypto exchanges and VASPs | Source: Elliptic

Fraud groups now use AI-generated selfies, passports, and driver’s licenses to bypass KYC checks at crypto exchanges (VASPs) and open mule accounts for laundering stolen funds.

Elliptic’s 2025 Typologies Report highlights marketplaces selling AI-enhanced fake IDs and “face-swap” selfie kits that can pass automated verification if a platform lacks advanced detection. Once approved, criminals move stolen crypto through these accounts in small “test transactions,” then escalate activity to larger transfers, often to addresses that analytics tools flag as high-risk.

Red flags include minor inconsistencies across ID documents, sudden spikes in volume after account creation, and unexplained connections to wallets previously tied to scams. For beginners, this matters because even legitimate platforms can be abused in the background, and exchanges now rely on blockchain analytics to freeze or trace funds before they disappear.
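Two of the red flags above, a volume spike soon after account creation and links to previously flagged wallets, can be expressed as simple rules. The Python sketch below is only an illustration: the `Transfer` record, the seven-day window, and the 10x spike ratio are assumptions chosen for the example, not thresholds taken from Elliptic or any exchange.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Transfer:
    """One transfer on an account (hypothetical record format)."""
    timestamp: datetime
    amount_usd: float
    counterparty: str


def account_red_flags(
    created_at: datetime,
    transfers: list[Transfer],
    flagged_wallets: set[str],
    new_account_window: timedelta = timedelta(days=7),  # assumed threshold
    spike_ratio: float = 10.0,  # assumed threshold
) -> list[str]:
    """Return the names of the red flags this account trips."""
    flags = []

    # A small test transaction followed by a much larger one soon after signup.
    early = sorted(
        (t for t in transfers if t.timestamp - created_at <= new_account_window),
        key=lambda t: t.timestamp,
    )
    if len(early) >= 2:
        first_amount = early[0].amount_usd
        largest_later = max(t.amount_usd for t in early[1:])
        if first_amount > 0 and largest_later >= spike_ratio * first_amount:
            flags.append("volume_spike_after_creation")

    # Any counterparty that analytics tools have already tied to scams.
    if any(t.counterparty in flagged_wallets for t in transfers):
        flags.append("flagged_counterparty")

    return flags


if __name__ == "__main__":
    created = datetime(2025, 1, 1)
    history = [
        Transfer(datetime(2025, 1, 2), 10.0, "wallet-a"),
        Transfer(datetime(2025, 1, 3), 5000.0, "wallet-b"),
    ]
    print(account_red_flags(created, history, {"wallet-b"}))
```

Real compliance systems combine many more signals, but the sketch shows why a tiny test deposit followed by a sudden large transfer stands out.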

8. Social Botnets on X (Twitter)

Crypto scammers operate massive botnets on X that look human, reply to posts instantly, and push wallet-drainer links or fake airdrops. According to security analysts cited by Chainalysis and industry media, AI makes these bots harder to spot: no repeated text, better grammar, localized slang, even personalized replies. A common tactic called “reply-and-block” has bots reply to real users (boosting engagement signals), then block them, which may reduce the victim’s visibility while amplifying scam posts.

These networks often impersonate founders, influencers, or exchange support, then bait users into signing malicious smart contracts. Because crypto users rely on X for real-time news, the bots exploit urgency and fear of missing out. For beginners: never trust links in replies, especially if they promise free tokens, guaranteed returns, or require wallet approvals; most high-profile “giveaways” on X are scams.

How to Defend Yourself from AI Scams

AI scams are getting smarter, but you can stay one step ahead. Follow these tips to protect your crypto and peace of mind.

1. Enable 2FA, Passkeys, or a Hardware Key: Two-factor authentication (2FA) blocks most account-takeover attempts because scammers need more than your password. Cybersecurity studies such as the Google Security Report show that account breaches drop by over 90% when hardware-based 2FA is enabled. If your platform supports Passkeys, turn them on; they replace passwords entirely with a cryptographic login tied to your device, making phishing attacks almost useless because there’s no password to steal. Tools like Google Authenticator, built-in Passkeys on iOS/Android, or a YubiKey make it nearly impossible for attackers to log in, even if they trick you with AI-generated phishing emails. Always enable 2FA or Passkeys on BingX, email, exchanges, and wallets that support them.

2. Verify Links and URLs Carefully: A huge share of AI scams start with a fake link. Chainalysis notes that AI-generated phishing websites are one of the fastest-growing scam vectors because they look nearly identical to real platforms. Before clicking, hover to preview the URL and make sure it matches the official BingX domain. Bookmark the login page and avoid links sent through email, Telegram, Discord, or Twitter replies; scammers often spoof support accounts or airdrop pages to steal logins and wallet info.

3. Be Skeptical of Anything That Sounds Too Good: If a bot claims “guaranteed returns,” “risk-free income,” or “double your crypto,” it’s a scam. Real trading, even with AI, never guarantees profit. Chainalysis reported that fake trading platforms and “AI signal bots” stole billions in 2024–25 by promising returns that no real system can deliver. If something sounds too good to be true in crypto, it almost always is.

4. Never Share Seed Phrases or Private Keys: Your seed phrase controls your wallet. Anyone who asks for it is trying to steal your crypto. No legitimate exchange, project, or support team will request it, not even once. Many AI-driven phishing scams now ask users to “verify” their wallet or “unlock bonus rewards” using a seed phrase. The moment you type it into a fake website, your assets are gone. Treat your seed phrase like your digital identity: private, offline, and never shared.

5. Use Official BingX Support Only: Scammers frequently impersonate exchange support agents through email or social media. They will claim there is a problem with your withdrawal or offer “assistance” to fix an account issue, then send you a malicious link. Always access support through the official BingX website or app. If someone messages you first, assume it’s a scam. Elliptic and TRM Labs note that impersonation attacks have spiked as AI tools make fake accounts harder to distinguish from real ones.

6. Store Long-Term Crypto in a Hardware Wallet: Hardware wallets like Ledger and Trezor keep your private keys offline, which protects your funds even if you fall for a phishing link or malware attack. Because the device must physically approve each transaction, scammers can’t steal your crypto remotely. Research from multiple crypto-security audits shows that cold storage remains the most effective method to protect long-term holdings, especially when markets are targeted by AI-driven wallet drainers.

7. Stay Informed with BingX Learning Resources: AI scams evolve fast, and staying educated is one of the strongest defenses. TRM Labs found a 456% rise in AI-enabled scams year-over-year, which means new tactics appear constantly. Reading trusted security guides, scam alerts, and phishing warnings helps you spot red flags before you become a victim.

BingX Academy regularly publishes beginner-friendly safety tips so users can trade confidently even in a fast-changing environment.
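The link check from tip 2 can also be done in code rather than by eye. The Python sketch below shows the comparison that matters: the hostname must be the official domain or a subdomain of it, not merely contain the brand name. The `bingx.com` entry in `OFFICIAL_DOMAINS` and the function name `is_official_link` are assumptions for illustration; confirm the real domain through the platform’s official app or bookmarked site.

```python
from urllib.parse import urlsplit

# Assumed for illustration; verify the official domain independently.
OFFICIAL_DOMAINS = frozenset({"bingx.com"})


def is_official_link(url: str, official_domains: frozenset[str] = OFFICIAL_DOMAINS) -> bool:
    """Return True only if url uses HTTPS and points at an official domain."""
    try:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower().rstrip(".")
    except ValueError:
        # Malformed URLs are treated as untrusted.
        return False
    if parts.scheme != "https" or not host:
        return False
    # Exact match or a true subdomain; "bingx.com.evil.io" fails both tests.
    return any(
        host == domain or host.endswith("." + domain)
        for domain in official_domains
    )


if __name__ == "__main__":
    for link in (
        "https://www.bingx.com/en/",
        "https://bingx.com.account-verify.io/login",
        "https://bingx.com@evil.example/claim",
        "http://bingx.com/",
    ):
        print(link, is_official_link(link))
```

Lookalike tricks such as putting the brand name before an `@` sign or as a prefix of another domain are exactly what the hostname comparison is meant to catch.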

Conclusion and Key Takeaways

AI-powered crypto scams are spreading because they’re cheap, scalable, and convincing, but you can still stay safe. Turn on 2FA, avoid clicking unverified links, store long-term funds in a hardware wallet, and never share your seed phrase. If anyone promises guaranteed profits or sends you a suspicious link, walk away. And as scammers evolve, your best defense is knowledge. Follow BingX Academy for ongoing security tips, scam alerts, and crypto-safety education so you can protect your assets in the AI era.
